RoboTeam Twente is a student team from the University of Twente that develops a team of autonomous soccer robots. Each year, the team competes in the RoboCup Small Size League, where teams from all over the world put their robots to the test in a tournament. Small Size League teams consist of 8 small robots that work together to get the orange golf ball into the opponent’s goal. Speed, precision and strategy are especially important in this league, as the games are typically fast-paced and chaotic. Accurate, low-latency tracking of all the robots and the ball is therefore vital.

RoboTeam Twente's experiences

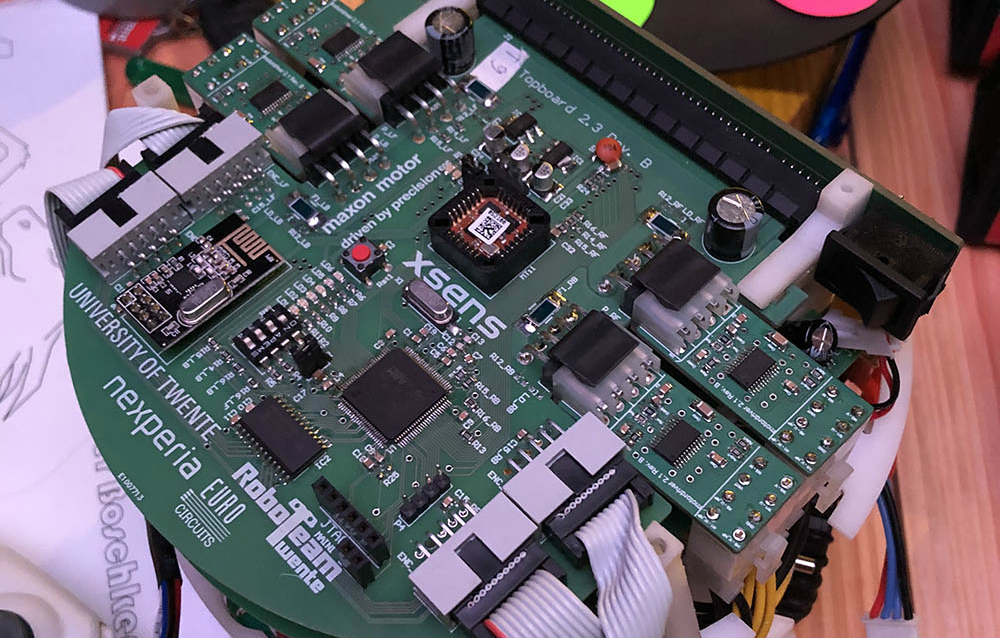

For all our tests and demos, we make use of the Xsens MTi-3 Attitude and Heading Reference System (AHRS), which is well suited to reliably tracking our robots at low latency. Cameras track the robots from above. The vision software that we use, SSL-Vision, is designed specifically for this competition. It recognizes the colored marker patterns on top of the robots (see the figure on the side) and links these to each robot's ID and team. The positions of the markers in the captured images are geometrically transformed to field coordinates, so for each robot we receive its position and yaw, along with the position of the ball.

The software is already quite efficient, but computer vision still introduces significant latency. From measurements on our setup, we found roughly 80 ms of delay between sending a command to a robot and measuring the resulting movement in our software. For tactical decision making this is not a significant problem. However, for the stability of our position control loops and obstacle avoidance, this delay turned out to be detrimental. To ensure stability, we had to make concessions on the performance and responsiveness of our robots, for example in obstacle avoidance situations.
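To get a feel for why 80 ms matters for control, the short sketch below estimates how far a robot has travelled while a vision frame is still in the pipeline. The function name and the listed speeds are hypothetical values chosen for illustration; only the 80 ms figure comes from our measurements.

```python
def latency_position_error(speed_mps, delay_s, accel_mps2=0.0):
    """Distance travelled during the delay, assuming constant acceleration.

    This is the amount by which a delayed position measurement lags
    behind the true position of the robot.
    """
    return speed_mps * delay_s + 0.5 * accel_mps2 * delay_s ** 2


DELAY_S = 0.080  # measured command-to-observation delay (80 ms)

# Hypothetical robot speeds in m/s, for illustration only.
for speed in (0.5, 1.0, 2.0, 4.0):
    error_cm = 100 * latency_position_error(speed, DELAY_S)
    print(f"{speed:.1f} m/s: position is about {error_cm:.0f} cm out of date")
```

At an assumed 2 m/s, for instance, the reported position lags the true position by 16 cm, which is comparable to the size of a robot and therefore hard to ignore when steering around obstacles.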

Lower latency and higher frequency

To improve our situation, we decided to equip each robot with the AHRS, which gives us information about its orientation and accelerations with much lower delay and at a higher frequency. Using the yaw and the x and y acceleration measurements, we could apply sensor fusion to combine the speed of these measurements with the accuracy of the vision measurements. To this end, we designed a discrete Kalman filter (DKF) running at 100 Hz (the same rate at which we read the AHRS) to obtain fast and accurate estimates of the pose and velocity of each robot. In this design it was especially important to correct for the delay between the AHRS and vision measurements, which further improved our estimates.
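The idea can be sketched in one dimension. The code below is not our actual filter: it is a minimal illustration that assumes a constant-acceleration model, a fixed vision delay of 8 filter steps (80 ms at 100 Hz), and a "rewind, correct, replay" scheme for the delay compensation. All names (`DelayCompensatedFilter`, `simulate`) and the noise parameters are hypothetical, and the simulation uses noise-free sensors, so it only shows the effect of the delay and does not reproduce our measured results.

```python
from collections import deque


def predict(x, P, accel, dt, q):
    """Propagate [position, velocity] one step using the measured acceleration."""
    pos, vel = x
    x_new = [pos + vel * dt + 0.5 * accel * dt * dt, vel + accel * dt]
    # F P F^T for F = [[1, dt], [0, 1]], written out by hand.
    p00 = P[0][0] + dt * (P[0][1] + P[1][0]) + dt * dt * P[1][1]
    p01 = P[0][1] + dt * P[1][1]
    p10 = P[1][0] + dt * P[1][1]
    p11 = P[1][1]
    # Process noise from acceleration uncertainty: q * G G^T, G = [dt^2/2, dt].
    g0, g1 = 0.5 * dt * dt, dt
    P_new = [[p00 + q * g0 * g0, p01 + q * g0 * g1],
             [p10 + q * g1 * g0, p11 + q * g1 * g1]]
    return x_new, P_new


def update(x, P, z, r):
    """Correct the state with a position measurement z (H = [1, 0])."""
    s = P[0][0] + r                      # innovation variance
    k0, k1 = P[0][0] / s, P[1][0] / s    # Kalman gain
    y = z - x[0]                         # innovation
    x_new = [x[0] + k0 * y, x[1] + k1 * y]
    P_new = [[(1 - k0) * P[0][0], (1 - k0) * P[0][1]],
             [P[1][0] - k1 * P[0][0], P[1][1] - k1 * P[0][1]]]
    return x_new, P_new


class DelayCompensatedFilter:
    """1D Kalman filter that applies vision updates at the time they were captured."""

    def __init__(self, dt=0.01, delay_steps=8, accel_std=0.5, vision_std=0.005):
        self.dt, self.delay_steps = dt, delay_steps
        self.q, self.r = accel_std ** 2, vision_std ** 2   # assumed noise levels
        self.x, self.P = [0.0, 0.0], [[1.0, 0.0], [0.0, 1.0]]
        # Each entry: (state, covariance, acceleration used to leave that state).
        self.past = deque(maxlen=delay_steps)

    def step(self, accel, delayed_vision=None):
        self.past.append((self.x, self.P, accel))
        self.x, self.P = predict(self.x, self.P, accel, self.dt, self.q)
        if delayed_vision is not None and len(self.past) == self.delay_steps:
            # Rewind to the state at capture time and correct it there.
            x, P = update(self.past[0][0], self.past[0][1], delayed_vision, self.r)
            accels = [a for _, _, a in self.past]
            self.past.clear()
            # Replay the stored accelerations to return to the present.
            for a in accels:
                self.past.append((x, P, a))
                x, P = predict(x, P, a, self.dt, self.q)
            self.x, self.P = x, P
        return self.x


def simulate(steps=200, dt=0.01, delay_steps=8, accel=1.0, accel_bias=0.0):
    """Robot accelerating from rest; returns (raw vision error, fused error) in m."""
    f = DelayCompensatedFilter(dt=dt, delay_steps=delay_steps)
    true_pos = [0.5 * accel * (k * dt) ** 2 for k in range(steps + 1)]
    est = f.x
    for k in range(steps):
        seen = (k + 1) - delay_steps     # index of the frame arriving now
        z = true_pos[seen] if seen >= 0 else None
        est = f.step(accel + accel_bias, z)   # accel_bias: hypothetical sensor bias
    vision_err = abs(true_pos[steps] - true_pos[steps - delay_steps])
    fused_err = abs(true_pos[steps] - est[0])
    return vision_err, fused_err


vision_err, fused_err = simulate()
print(f"raw vision error: {vision_err:.4f} m, fused error: {fused_err:.6f} m")
```

The key design choice is that a vision measurement is never compared with the current state. It is compared with the stored state from the moment the frame was captured, and the inertial data recorded since then carries the correction forward to the present.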

Figures

The most important gain of fusing the sensors is summarized in the plots in the figures on the right. The errors in our pure vision measurements, caused by the 80 ms delay, are compared against the errors in the sensor fusion estimates. We saw that, on average, applying the DKF reduces the positional error caused by the delay by roughly a factor of 5. For the velocity estimate the improvement was smaller, but still significant. In addition, the DKF filtered out the sharpest peaks in the velocity estimate.

Are you interested in our solutions? Find out more about the MTi-3 AHRS: