Kite & Lightning is a cinematic VR company known for creating immersive computer-generated worlds that blend interactive gaming, social experiences and storytelling. The company made its name with original, emotionally charged, transformative experiences such as the award-winning VR mini opera Senza Peso.

Full-body and facial performance capture

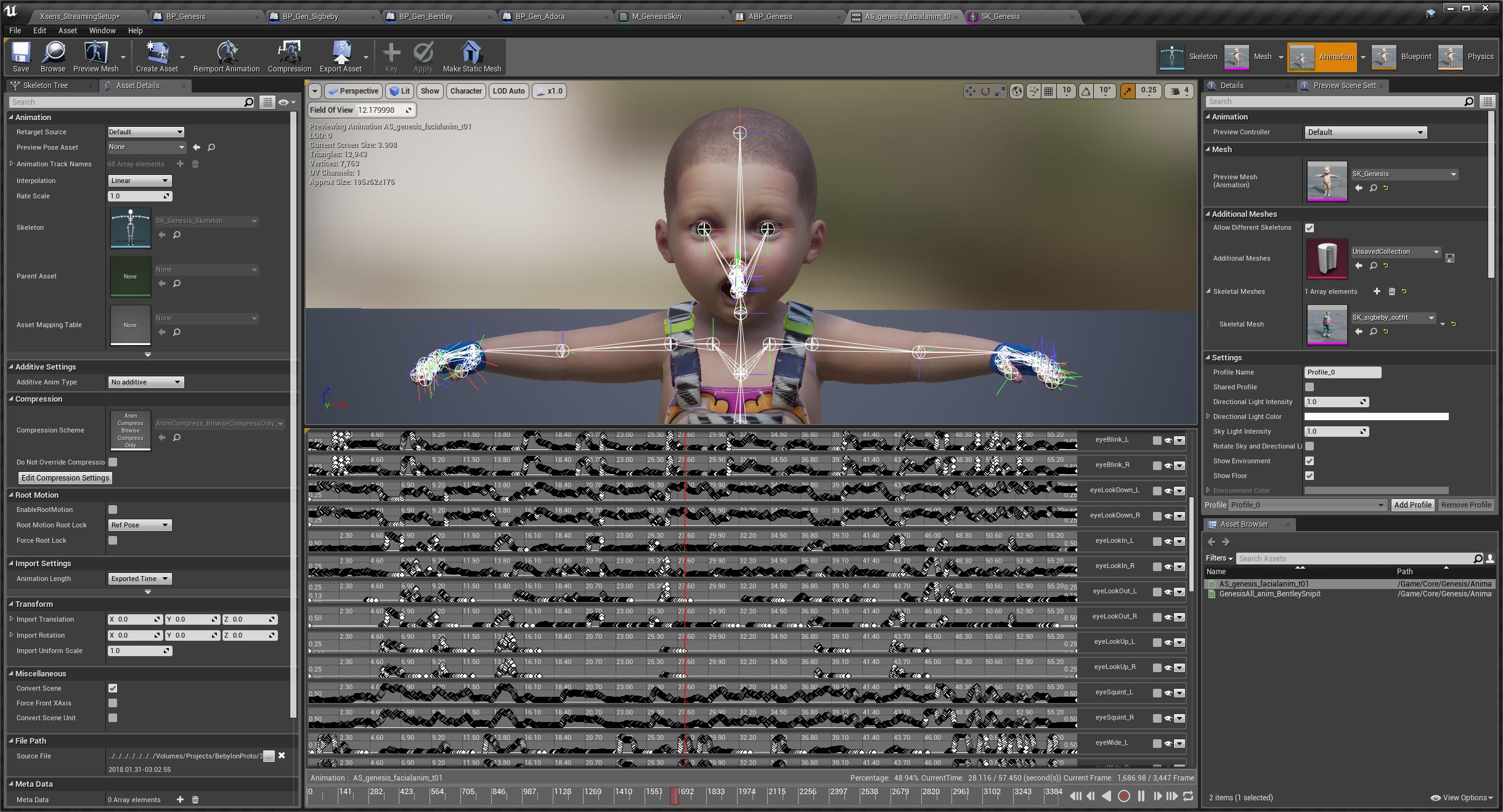

Cory Strassburger, co-founder of Kite & Lightning, uses an iPhone X in tandem with Xsens MVN Animate to capture full-body and facial performance simultaneously. The final animated character can be streamed live, retargeted and cleaned via IKINEMA LiveAction into Epic Games’ Unreal Engine, all in real time. This setup was used to create the studio’s upcoming game ‘Bebylon’.

“Thanks to recent technology innovations, we now have the ability to easily generate high-quality full-performance capture data and bring our wild game characters to life – namely the iPhone X’s depth sensor and Apple’s implementation of face tracking, coupled with Xsens and the amazing quality they've achieved with their inertial body capture systems. Stream that live into the Unreal Engine via IKINEMA LiveAction and you've got yourself a very powerful and portable mocap system.”

Any place

Cory showed at SIGGRAPH 2018 how a simple DIY setup built on accessible technology can drive real-time character capture and animation. According to him, this approach to motion capture does not require applying markers or setting up multiple cameras for a mocap volume. It relies only on an Xsens MVN system, a DIY mocap helmet with an iPhone X pointed at the user’s face, and IKINEMA LiveAction to stream and retarget the motion to ‘Beby’ in Unreal Engine. With this setup, users can act out a scene wherever they are.
